LinkedIn pitches itself as a social network for your professional life, proclaiming itself to be “the world’s largest professional network with more than 1 billion members in more than 200 countries and territories worldwide.”

Many of us use it to promote ourselves, our companies, and our services; to seek employees or employment; and to publish our own professional content and commentary such as videos and articles.

However, as we’re all aware, or should be, there’s a lot of low-quality, disingenuous content on LinkedIn, often masquerading as legitimate posts by accounts with believable profiles and mugshots.

Sometimes, this rogue content, which often goes unchallenged by LinkedIn and Microsoft (the owners of LinkedIn), is fairly obviously what’s known as slop, today’s pejorative term for genuine-sounding but bogus articles or research pieces that have been churned out to a formula by AI.

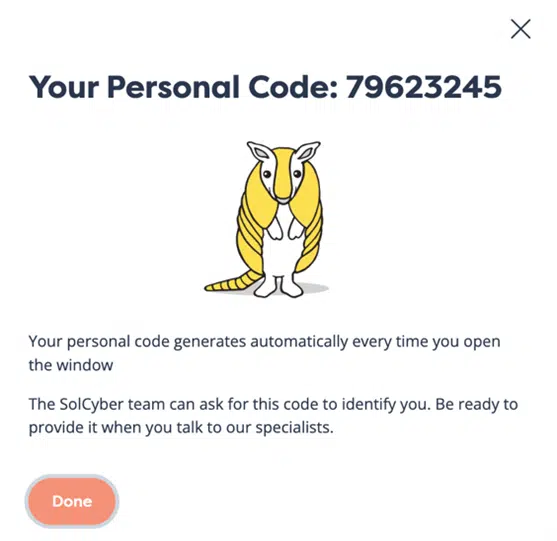

If you’re a LinkedIn user and you’re not yet following @SolCyber, do so now to keep up with the delightfully useful Amos The Armadillo’s Almanac series. SolCyber’s lovable mascot Amos provides regular, amusing, and easy-to-digest explanations of cybersecurity jargon, from MiTMs and IDSes to DDoSes and RCEs.

Even if you know all the jargon yourself, Amos will help you explain it to colleagues, friends, and family in an unpretentious, unintimidating way.

In the field of cybersecurity, this sort of disingenuous content often seems to have been constructed using what you might call “back-to-front science,” where what’s generally known as the scientific method is sneakily run in reverse to create surprisingly believable “evidence” to sell what is sometimes nothing more than cybersecurity snake oil.

This cart-before-the-horse approach to evidence typically runs like this:

Clearly, cybersecurity isn’t the only field in which unjustified, and sometimes unjustifiable, scientific conclusions are peddled to attract investors, customers, and influencers.

Anyone who followed the rise and fall of Elizabeth Holmes and her biotech company Theranos in recent years will be well aware of this.

But there is nevertheless plenty of snake oil in cybersecurity, and some of the regularly repeated pseudoscience pitches on LinkedIn that we have recently felt it necessary to debunk include:

Sadly, claims of this sort can be difficult to contradict.

Those who peddle unsupported or unsupportable research can always argue along the lines of, “Following our advice won’t do any harm, but even if your counter-advice is correct right now, the risk could change in the future, which would make your counter-advice actively dangerous.”

This is clearly nonsense, because you don’t have infinite time and money to expend on cybersecurity, so every useless “precaution” you take forces you to miss out on a genuinely useful cybersecurity product, service, or mindset that you could have adopted instead.

It’s also an unattractive admission to say, “OK, so we’re deliberately telling a lie to attract clicks, but it’s a harmless lie that gets people interested in cybersecurity, so there is no justification for calling it out by reporting the truth instead.”

Another bogus argument you will often hear to support fake cybersecurity research is an “appeal to higher authority,” where the higher authority is merely alluded to, and the claimed counter-evidence is never provided, using “secrecy” as an excuse.

These counter-arguments are often pitched in a superior and self-righteous tone, such as, “You are clearly unaware of the state-of-the-art work by our security services that isn’t public yet.”

(Quite how and why the commenter is not only party to these secrets but also happy to expose their existence on social media is never explained.)

Would it help if LinkedIn were to apply Microsoft’s much-vaunted cybersecurity prowess with AI to detecting cybersecurity-oriented posts and comments that fly in the face of good science, good engineering, and good research?

Even better, what if LinkedIn were to scrape everyone’s posts to mine them for science-and-engineering truths, in the hope of training its own AI to detect the cybersecurity scams and half-truths promoted by unscrupulous AI-assisted marketing teams?

And what if LinkedIn were to make this scrape-and-train process the default for everyone?

After all, making it strictly opt-in might lead to most users declining to accept, while dedicated scammers would be careful to opt in, thus ensuring that the bulk of this training happened against pre-tainted data.

Well, LinkedIn has indeed adopted an opt-out approach to scraping-and-training its AI based on what you publish on the platform.

There’s no suggestion, however, that this decision was based on boosting cybersecurity rather than on improving LinkedIn’s own commercial activities in its primary revenue-generating fields of advertising and recruitment.

Maybe, just maybe, this will improve the platform’s ability to limit the effectiveness of fake cybersecurity campaigns...

...but it also means that your own articles and comments will be training LinkedIn to produce what is effectively content that competes with yours, and you may be worried that the platform will promote its own derivative material more aggressively than it promotes your original content.

And while LinkedIn rolled out this “option” some time ago in the US, it has this week extended its reach to other parts of the world, including Canada, the UK, the EU, Switzerland, and Hong Kong.

If you want to opt out, which you can do reactively in those parts where AI scraping has already been activated, and proactively where it has yet to be turned on, head to Settings > Data Privacy and find the option named Data for Generative AI Improvement.

In a browser, you access the Settings screen by clicking the Me icon in the top right half of your home page and choosing Settings and Privacy:

In the app, tap your avatar icon in the top left corner and choose the Settings cog in the popup menu that appears:

Choose the Data for Generative AI Improvement option:

And ensure that the toggle is set to Off:

Review the rest of the settings in Settings > Data Privacy, too, because there are other opt-out settings that you may not yet know about.

While you’re about it, you might want to wander around the options available in Settings > Advertising Data as well, just in case there are any data-scraping surprises that you have been opted into automatically:

By the way, it’s worth taking the time to explore the data collection and privacy settings available in all the social media apps you use, just to make sure that you aren’t oversharing, and that you haven’t “agreed” by default to data harvesting settings that you don’t want.

Remember these simple rhymes: Be Aware/Before You Share, and If In Doubt/Don’t Give It Out.

If you’re overwhelmed by the complexity of the cybersecurity choices facing you and your company, why not seek a helping hand from SolCyber’s unashamedly human-centric cybersecurity service?

Signing up with SolCyber will not only save you time and money, and free you up to concentrate on your core business, but also proactively improve the cybersecurity knowledge and attitude of all the humans in your business, too!

Why not ask how SolCyber can help you do cybersecurity in the most human-friendly way? Don’t get stuck behind an ever-expanding convoy of security tools that leave you at the whim of policies and procedures that are dictated by the tools, even though they don’t suit your IT team, your colleagues, or your customers!

Paul Ducklin is a respected expert with more than 30 years of experience as a programmer, reverser, researcher and educator in the cybersecurity industry. Duck, as he is known, is also a globally respected writer, presenter and podcaster with an unmatched knack for explaining even the most complex technical issues in plain English. Read, learn, enjoy!
