AI in infrastructure is suddenly everywhere. In Japan, a state-of-the-art AI-powered earthquake recognition system will help authorities detect the epicentre of a Nankai Trough earthquake more accurately. In Seoul, AI waste bins separate plastic types automatically for better recycling. And Dubai is using AI to improve road safety.

One key technology in AI that’s found a footing in public infrastructure is image recognition technology. At airports, facial recognition technology (FRT) is being increasingly used to speed up security checks. And in five cities across Maryland, USA, a company called Obvio has deployed AI-powered cameras at stop signs to detect traffic violations. The cameras detect cars running stop signs, illegal turns, distracted driving, unsafe lane changes, and other violations.

When a violation occurs, the camera processes the offending vehicle’s license plate number, matches it to the state’s DMV database, and then sends all the information to law enforcement.

As groundbreaking as all this innovation is, it also opens the door to dangerous cybercrime. AI systems are high-value cyber targets because they collect data in real time and at high volume, and that data is especially valuable when it contains personally identifiable information (PII).

Traffic cameras like Obvio’s store sensitive data such as license plate numbers, driver behavior, and images of people. FRT systems pose even greater risks because they capture people’s faces. A breach could lead to identity theft or unauthorized surveillance.

Unauthorized surveillance is already a concern in the Asia-Pacific region, which lacks comprehensive governance frameworks to regulate AI. It is a concern in the UK too: in 2022, citing fears that such surveillance could pose a national security threat, 67 MPs called for a ban on certain Chinese CCTV cameras in the UK.

In this article, we look in depth at the cybersecurity risks of using AI in civic systems and at what can be done to make those systems more secure.

AI-powered systems rely on high-volume data ingestion. In Obvio’s case, this includes vehicle movements, timestamps, and potentially identifiable information like license plates or driver behaviors. This massive data flow makes them attractive targets for cyberattacks.

Obvio processes data locally on its cameras, purges any data older than 12 hours, and transmits data only when its AI detects a violation. Still, all the participating municipalities can access the cameras remotely, so each camera remains reachable over a network.
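The retention behavior described above can be sketched as an on-device buffer of recent frames. This is a minimal illustration under stated assumptions, not Obvio’s implementation: the `Frame` and `RetentionBuffer` names are hypothetical, frames are assumed to arrive in timestamp order, and only frames flagged as violations are selected for upload.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass

RETENTION_SECONDS = 12 * 60 * 60  # the 12-hour window described in the article


@dataclass
class Frame:
    """Hypothetical record of one camera observation."""
    timestamp: float
    plate: str
    violation: bool


class RetentionBuffer:
    """Hypothetical on-device store: keeps frames for a fixed window only."""

    def __init__(self, retention_seconds: float = RETENTION_SECONDS):
        self.retention_seconds = retention_seconds
        self._frames: deque[Frame] = deque()  # assumed to be in timestamp order

    def add(self, frame: Frame) -> None:
        self._frames.append(frame)

    def purge(self, now: float) -> int:
        """Drop frames older than the retention window; return how many were dropped."""
        cutoff = now - self.retention_seconds
        dropped = 0
        while self._frames and self._frames[0].timestamp < cutoff:
            self._frames.popleft()
            dropped += 1
        return dropped

    def violations_to_transmit(self) -> list[Frame]:
        """Only frames flagged as violations ever leave the device."""
        return [f for f in self._frames if f.violation]

    def __len__(self) -> int:
        return len(self._frames)


buf = RetentionBuffer()
t0 = 1_000_000.0
buf.add(Frame(t0, "ABC123", violation=False))
buf.add(Frame(t0 + 60, "XYZ789", violation=True))
dropped = buf.purge(now=t0 + RETENTION_SECONDS + 30)  # first frame is now older than 12 h
print(dropped, len(buf))  # 1 1
```

Bounding retention this way caps what a compromised camera can leak: provided the purge runs regularly, nothing an attacker extracts from the device is older than the retention window.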

This isn’t a criticism of Obvio specifically, but rather a comment on the risks of AI as a technology in general.

Likewise, none of this is intended to say we shouldn’t develop AI tools that improve road safety. However, it’s vital that anyone creating such a solution is aware of the risks associated with the technology.

Encryption is another weak point. Flawed or missing encryption of data at rest or in transit can expose that data to attackers.

In the rush to get a product to market, startups may also rely on many suppliers, each of which is a potential route for a supply chain attack. A supply chain is only as strong as its weakest link, as the notorious SolarWinds and MOVEit hacks showed.

Any vulnerable endpoint in an AI system could disclose data. In Obvio’s case, a compromised camera would expose at most 12 hours of data. Other systems might expose far more, depending on the endpoint’s nature and how much on-device processing it performs.

As systems expand to cover more locations, the number of endpoints multiplies, increasing the risk.

APIs facilitate communication between endpoints, servers, and external systems, but poor design or security can make them weak links.

For example, an API might grant excessive permissions or have weak authentication practices. APIs might likewise suffer supply chain attacks if they’re hosted on weak infrastructure.
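One way to avoid granting excessive permissions is to make every endpoint require a specific scope and to deny anything not explicitly listed. The sketch below assumes the caller’s token has already been authenticated (for example, its signature checked); the endpoint paths, scope names, and `Token` type are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical mapping of (method, path) to the single scope each endpoint requires.
REQUIRED_SCOPES = {
    ("GET", "/violations"): "violations:read",
    ("POST", "/violations"): "violations:write",
    ("GET", "/cameras/config"): "cameras:admin",
}


@dataclass(frozen=True)
class Token:
    """An already-authenticated caller and the scopes it was granted."""
    subject: str
    scopes: frozenset


def is_authorized(token: Token, method: str, path: str) -> bool:
    required = REQUIRED_SCOPES.get((method, path))
    if required is None:
        return False  # deny by default: unlisted endpoints are never allowed
    return required in token.scopes


# A reporting dashboard only needs to read violations, so that is all it gets.
reporting_client = Token("city-dashboard", frozenset({"violations:read"}))
print(is_authorized(reporting_client, "GET", "/violations"))      # True
print(is_authorized(reporting_client, "GET", "/cameras/config"))  # False
print(is_authorized(reporting_client, "DELETE", "/violations"))   # False
```

Denying by default means a forgotten or newly added endpoint fails closed rather than open.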

AI models can also be exploited through techniques like data poisoning, and their reliability can erode through algorithmic drift.

Data poisoning occurs when threat actors inject malicious or misleading data into an AI model’s training set, skewing what the model learns.

Algorithmic drift refers to the degradation of an AI model’s performance over time due to changes in input data or environment, leading to unreliable decisions.
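Drift is usually caught by monitoring rather than prevented outright. One common technique is the Population Stability Index (PSI), which compares the distribution of a model input in production against a baseline. The bins and figures below are hypothetical, and because PSI tracks shifts in inputs rather than accuracy, it is a proxy signal rather than a full performance check.

```python
import math


def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two binned distributions.

    Each argument is a list of bin proportions that sums to 1. `eps` guards
    against log(0) when a bin is empty.
    """
    total = 0.0
    for e, a in zip(expected, actual):
        e = max(e, eps)
        a = max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


# Hypothetical share of detections per time-of-day bucket.
baseline = [0.25, 0.25, 0.25, 0.25]  # distribution when the model was validated
shifted = [0.10, 0.20, 0.30, 0.40]   # distribution observed in production

print(psi(baseline, baseline))            # 0.0
print(round(psi(baseline, shifted), 3))   # 0.228
```

A frequently cited rule of thumb treats a PSI below 0.1 as stable and above 0.2 as a significant shift worth investigating, but thresholds should be tuned for each system.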

Breaches of AI-powered surveillance systems can have significant fallout, especially when a breach exposes a large amount of PII.

For a company that surveils vehicles nationwide, a breach could pose significant personal risk to high-profile individuals. For example, hackers might track a target’s movements through their license plate number and plan an attack accordingly.

It’s conceivable that future AI infrastructure services will include facial recognition, geopositional data, and myriad other data points that could create severe risks for the general population. A breach could expose real-time location data that lets cybercriminals track citizens.

Despite its flaws, the usefulness and potential of AI are beyond question. Campaigning to reduce the use of AI is the wrong direction. However, AI must be implemented alongside sufficient security measures to keep it safe.

Here are our recommendations for how to secure civic tech systems, whether those systems use AI or not.

Adopt a zero-trust architecture, in which no user or device is automatically trusted. Access to the system should be verified through multi-factor authentication (MFA) and other strong identity checks. Networks should be segmented to limit how far an attack can spread, with AI components isolated as much as possible. All access should follow the principle of least privilege.

Protect sensitive data with robust encryption, such as AES-128 or AES-256. All transmitted data should travel only over encrypted protocols such as TLS, with controls in place that actively block unencrypted traffic.
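As an illustration of the in-transit half of this advice, Python’s standard library can build a client context that requires certificate verification and a modern TLS version, and a simple guard can refuse plain-HTTP endpoints. This is a minimal sketch: the `is_encrypted_url` helper and the choice of TLS 1.2 as the floor are assumptions, and it does not cover encryption at rest.

```python
import ssl
from urllib.parse import urlparse


def make_client_context() -> ssl.SSLContext:
    """Build a TLS client context that verifies certificates and hostnames."""
    ctx = ssl.create_default_context()            # CERT_REQUIRED + hostname checking by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse SSL 3.0, TLS 1.0 and TLS 1.1
    return ctx


def is_encrypted_url(url: str) -> bool:
    """Hypothetical guard: only allow uploads over HTTPS."""
    return urlparse(url).scheme == "https"


ctx = make_client_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)  # True True
print(is_encrypted_url("https://example.org/upload"))  # True
print(is_encrypted_url("http://example.org/upload"))   # False
```

The context can then be passed to a standard client call such as `urllib.request.urlopen(url, context=ctx)`, so that connections with invalid certificates or outdated protocol versions fail instead of silently downgrading.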

Edge devices should additionally implement hardware-based encryption.

All encryption should be end-to-end.

All systems should be routinely tested to uncover vulnerabilities. Red-team exercises should focus on AI-specific threats like data poisoning.

Penetration testing should cover prompt injection, including visual prompt injection, in which malicious instructions are embedded in images.

Teams should also thoroughly test traditional attack vectors that could give hackers access to AI models.

Ensure edge devices running AI are built with security in mind by implementing hardware-security basics such as secure boot, minimal exposed interfaces, and signed firmware updates.
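Signed firmware updates come down to one rule: the device refuses any image whose authentication tag does not verify. Production schemes use asymmetric signatures (such as Ed25519 or ECDSA) so the device stores only a public key. The sketch below substitutes a standard-library HMAC purely to show the verify-before-install flow; the key and function names are hypothetical.

```python
import hashlib
import hmac

# HYPOTHETICAL provisioned key. A real signed-firmware scheme would use an
# asymmetric signature so the device never holds a secret signing key;
# HMAC stands in here only because it ships with the standard library.
DEVICE_KEY = b"example-provisioned-key"


def sign_firmware(image: bytes, key: bytes = DEVICE_KEY) -> bytes:
    """Vendor side: compute an authentication tag over the firmware image."""
    return hmac.new(key, image, hashlib.sha256).digest()


def verify_firmware(image: bytes, tag: bytes, key: bytes = DEVICE_KEY) -> bool:
    """Device side: recompute the tag and compare in constant time."""
    expected = hmac.new(key, image, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)


def install_update(image: bytes, tag: bytes) -> str:
    if not verify_firmware(image, tag):
        return "rejected"  # never flash an image that fails verification
    return "installed"


firmware = b"camera-firmware-v2 image bytes"
tag = sign_firmware(firmware)
print(install_update(firmware, tag))            # installed
print(install_update(firmware + b"\x00", tag))  # rejected
```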

Transparent audit trails involve maintaining a clear, tamper-proof record of all system activities to ensure accountability and traceability. This is vital in AI-driven surveillance systems.

At a minimum, all system activities should be logged in a tamper-proof system to create a verifiable record of events.
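One common way to make a log tamper-evident is a hash chain: each entry stores the hash of the previous entry, so altering any earlier record breaks every hash after it. This minimal sketch uses hypothetical event fields. On its own it detects tampering rather than preventing it, and an attacker who can rewrite the whole log could recompute the chain, so in practice the latest hash would also be stored somewhere the attacker cannot write (an assumption not shown here).

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, event: dict) -> str:
    payload = json.dumps(event, sort_keys=True)  # canonical encoding of the event
    return hashlib.sha256((prev_hash + payload).encode()).hexdigest()


def append_entry(log: list, event: dict) -> None:
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _entry_hash(prev_hash, event)})


def verify_chain(log: list) -> bool:
    """Recompute every link; any edited, removed, or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, {"user": "operator-1", "action": "view_camera", "camera": "cam-07"})
append_entry(audit_log, {"user": "operator-1", "action": "export_clip", "camera": "cam-07"})
print(verify_chain(audit_log))  # True

audit_log[0]["event"]["user"] = "operator-2"  # simulate tampering with an earlier record
print(verify_chain(audit_log))  # False
```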

The system should also monitor logs in real time so it can trigger alerts or respond whenever it detects an anomaly.
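Real-time monitoring typically means evaluating detection rules as log events arrive. As one hypothetical rule, the sketch below raises an alert when a single source exceeds a threshold of failed logins within a sliding time window; the threshold, window length, and class name are illustrative assumptions.

```python
from collections import deque


class FailedLoginMonitor:
    """Hypothetical rule: alert when one source has more than `threshold`
    failed logins within the last `window` seconds."""

    def __init__(self, threshold: int = 5, window: float = 60.0):
        self.threshold = threshold
        self.window = window
        self._events = {}  # source -> deque of failure timestamps

    def record_failure(self, source: str, timestamp: float) -> bool:
        """Record one failed login; return True if it should trigger an alert."""
        q = self._events.setdefault(source, deque())
        q.append(timestamp)
        # Discard failures that have fallen out of the sliding window.
        while q and q[0] <= timestamp - self.window:
            q.popleft()
        return len(q) > self.threshold


monitor = FailedLoginMonitor(threshold=3, window=60.0)
alerts = [monitor.record_failure("10.0.0.7", t) for t in (0, 10, 20, 30)]
print(alerts)  # [False, False, False, True]
```

Keeping a separate window per source means one noisy client does not mask, or trigger alerts on behalf of, another.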

Response protocols complement this by providing clear guidance on how to contain and recover from security incidents.

Securing AI-driven infrastructure isn’t only about the tech. Robust policy frameworks and procurement standards play a vital role.

Policymakers should set stringent security baselines for AI procurement and require every vendor to meet them. Policy should also mandate transparency in how AI systems are designed.

Effective governance requires collaboration between sectors and professionals, including cybersecurity experts, privacy specialists, legal advisors, business owners, and government officials. By integrating diverse perspectives, governance teams can create holistic policies that balance security, privacy, and ethical considerations, ensuring AI systems serve the public good.

To ensure public safety and trust in AI-driven civic infrastructure, the concerns we’ve raised in this article should be addressed comprehensively from the outset.

Early action is crucial in preventing threats and devastating data breaches. This is true of any tech, but especially so for AI because of the massive volume of data it processes and the potential for that data to be abused.

Implementing zero-trust architectures, encrypting data both at rest and in transit, and conducting routine red teaming are the bare minimum.

It’s not only a tech matter. Policymakers should work proactively to ensure that clear regulatory guidelines and frameworks exist so AI companies can operate securely.

Waiting for breaches to occur before addressing vulnerabilities is untenable. Security should be front and center for anyone developing AI infrastructure.

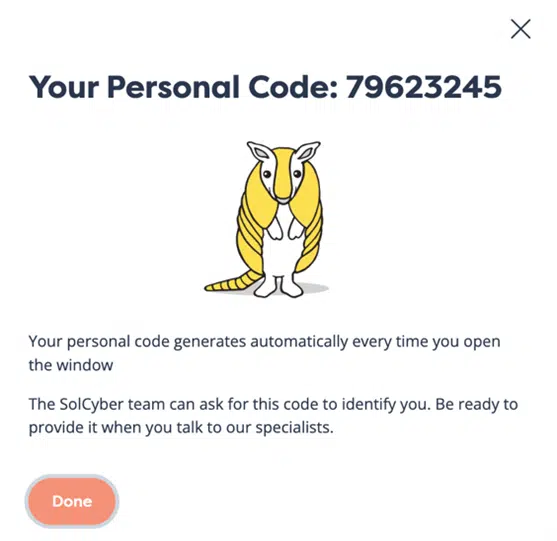

At SolCyber, our cybersecurity experts can help guide your AI infrastructure project as well as provide all the essential services to ensure the project is secure. To learn more about how SolCyber can help you, reach out to us for a chat.

Photo by Tobias Tullius on Unsplash
