The good news is that Google’s big-news global cloud outage of 2025-06-12 and 2025-06-13 (yes, that was Friday the Thirteenth) was sorted out within a few hours, a creditable response given the scale of the bug.

The bad news, of course, is the point we just mentioned: the sheer scale of the bug.

Intriguingly, the word sheer has two contrasting meanings, though both of them are metaphorically relevant in this case.

Sheer stockings, for example, are so thin and finely woven that they are easily torn and ruined without hope of repair; sheer cliffs, on the other hand, are so solid and vertically intimidating that they are difficult or even impossible to climb.

To give you an idea of just how sheer the bug was, consider that Google has stated that these Google products alone were broken in the outage, meaning that every business, site or service that depends on one of these may have been temporarily affected:

API Gateway, Agent Assist, AlloyDB for PostgreSQL, Apigee, AppSheet, Artifact Registry, AutoML Translation, BigQuery Data Transfer Service, Cloud Asset Inventory, Cloud Build, Cloud Data Fusion, Cloud Firestore, Cloud Healthcare, Cloud Key Management Service, Cloud Load Balancing, Cloud Logging, Cloud Memorystore, Cloud Monitoring, Cloud NAT, Cloud Run, Cloud Security Command Center, Cloud Shell, Cloud Spanner, Cloud Vision, Cloud Workflows, Cloud Workstations, Colab Enterprise, Contact Center AI Platform, Contact Center Insights, Database Migration Service, Dataplex, Dataproc Metastore, Datastream, Dialogflow CX, Dialogflow ES, Document AI, Gmail, Google App Engine, Google BigQuery, Google Calendar, Google Chat, Google Cloud Bigtable, Google Cloud Composer, Google Cloud Console, Google Cloud DNS, Google Cloud Dataproc, Google Cloud Deploy, Google Cloud Functions, Google Cloud Marketplace, Google Cloud NetApp Volumes, Google Cloud Pub/Sub, Google Cloud SQL, Google Cloud Search, Google Cloud Storage, Google Compute Engine, Google Docs, Google Drive, Google Meet, Google Security Operations, Google Tasks, Google Voice, Hybrid Connectivity, Identity Platform, Identity and Access Management, Integration Connectors, Looker (Google Cloud core), Looker Studio, Managed Service for Apache Kafka, Media CDN, Memorystore for Memcached, Memorystore for Redis, Memorystore for Redis Cluster, Migrate to Virtual Machines, Network Connectivity Center, Persistent Disk, Personalized Service Health, Pub/Sub Lite, Resource Manager API, Retail API, Speech-to-Text, Text-to-Speech, Traffic Director, VMWare engine, Vertex AI Feature Store, Vertex AI Search, Vertex Gemini API.

Servers in these locations were affected:

Belgium, Berlin, Columbus, Dallas, Dammam, Delhi, Doha, Finland, Frankfurt, Hong Kong, Iowa, Jakarta, Johannesburg, Las Vegas, London, Los Angeles, Madrid, Melbourne, Mexico, Milan, Montréal, Mumbai, Netherlands, Northern Virginia, Oregon, Osaka, Paris, Salt Lake City, Santiago, Seoul, Singapore, South Carolina, Stockholm, Sydney, São Paulo, Taiwan, Tel Aviv, Tokyo, Toronto, Turin, Warsaw, Zurich.

Popular large-scale services that reportedly suffered as a side-effect included:

Cloudflare, Discord, Firebase Studio, NPM, Snapchat, Spotify.

To be fair to Google, the company has ’fessed up to what went wrong, albeit wrapped in techno-babble rather than explained in plain English.

As an example, the explanation starts with the following paragraph:

On May 29, 2025, a new feature was added to Service Control for additional quota policy checks. This code change and binary release went through our region by region rollout, but the code path that failed was never exercised during this rollout due to needing a policy change that would trigger the code. As a safety precaution, this code change came with a red-button to turn off that particular policy serving path. The issue with this change was that it did not have appropriate error handling nor was it feature flag protected. Without the appropriate error handling, the null pointer caused the binary to crash. Feature flags are used to gradually enable the feature region by region per project, starting with internal projects, to enable us to catch issues. If this had been flag protected, the issue would have been caught in staging.
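To make the feature-flag idea in that explanation a little more concrete, here is a minimal Python sketch of how a new code path might be gated by region and by project, with a kill switch standing in for the “red button”. Every name in it (`FeatureFlag`, `check_quota`, the region and project strings) is invented for illustration; this is not Google’s code, merely one way that such gating could look.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureFlag:
    """Hypothetical flag: on only for listed regions AND listed projects."""
    regions: frozenset = frozenset()
    projects: frozenset = frozenset()
    kill_switch: bool = False  # the "red button": overrides everything else

    def is_on(self, region: str, project: str) -> bool:
        if self.kill_switch:
            return False
        return region in self.regions and project in self.projects


def check_quota(flag, region, project, new_check, old_check):
    """Run the new code path only where the flag allows it."""
    if flag.is_on(region, project):
        return new_check()
    return old_check()


def buggy_new_check():
    # Stand-in for an untested code path that crashes (assumption).
    raise RuntimeError("new quota check crashed")


def trusted_old_check():
    return "ok (old path)"


# Enable the new path for one internal project in one staging region only.
flag = FeatureFlag(
    regions=frozenset({"staging"}),
    projects=frozenset({"internal-test"}),
)

# A customer project elsewhere never reaches the buggy code.
print(check_quota(flag, "europe", "customer-1",
                  buggy_new_check, trusted_old_check))
```

With gating of this sort, the crash would show up only where the flag had been turned on, which is the point of starting with internal projects.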

In blunter form, here’s what Google seems to be saying:

Apparently, this series of “move slowly but break things anyway” errors was made slightly worse by one further problem, namely that the system hadn’t been designed to deal with what electricity grids would refer to as a dead start, where the system has to be brought up from complete failure.

Dead starts typically need to be handled in a carefully controlled way, rather than all-at-once, to avoid crashing all over again.

If you’ve ever had your domestic electricity trip during winter-time, you’ll know that simply turning the main breaker back on probably won’t work. The combined startup current required to restart all your high-power devices at the same time (notably devices such as heat pumps that rely on electric motors) just causes the breaker to trip again. You need to spread the load by turning on individual circuits one-by-one and waiting for each device to settle into regular operation before trying the next one.

Google’s incident report explicitly mentions exponential backoff, which loosely means doubling the time a failed process waits after each successive failure (e.g. wait 1 second, then 2 seconds, then 4, 8, 16 and so on), but anything that avoids lots of processes or servers inadvertently activating at the same time can help.
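As a rough illustration of the doubling described above, here is a short Python sketch. The function names, the 60-second cap and the random “jitter” (waiting a random fraction of the computed delay, so that many clients don’t all retry at the same instant) are assumptions made for this example, not details taken from Google’s report.

```python
import random
import time


def backoff_delay(attempt, base=1.0, cap=60.0):
    """Delay before retry number `attempt` (0-based): 1, 2, 4, 8... up to cap."""
    return min(cap, base * 2 ** attempt)


def retry_with_backoff(operation, max_attempts=6, base=1.0, cap=60.0,
                       sleep=time.sleep, rng=random):
    """Call operation() until it succeeds or max_attempts is used up."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller see the error
            # Jitter: wait a random time between 0 and the doubled delay,
            # so a crowd of failed processes doesn't restart in lockstep.
            sleep(rng.uniform(0, backoff_delay(attempt, base, cap)))


# The un-jittered schedule for the first five retries.
print([backoff_delay(n) for n in range(5)])
```

The `sleep` and `rng` parameters are there only so that the waiting can be swapped out or recorded in tests; in ordinary use you would leave them at their defaults.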

Remember: if even the behemoth that is Google can trip itself and its customers up with a null pointer error, then anyone can.

Null pointers are almost always encoded as “a memory address of zero”, denoting either that necessary memory space has not yet been allocated so the program cannot proceed, or that an error has occurred and the program should detect and respond accordingly rather than ploughing on regardless.

Null pointer crashes therefore often happen because an error was ignored, thereby provoking a yet more serious error for which the only safe response from the operating system is to kill off the program abruptly to prevent it doing yet more harm.
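Python doesn’t expose raw pointers, but its `None` value plays a similar role, so a small sketch can show the difference between ploughing on and checking first. The `QuotaPolicy` class, its `limit` field and the function names below are all hypothetical, chosen only to echo the sort of policy check mentioned earlier; how a real service should respond to a bad record is a design decision this example doesn’t try to settle.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class QuotaPolicy:
    # None stands in for a missing value (our "null pointer").
    limit: Optional[int] = None


class PolicyError(Exception):
    """Raised when a policy record is missing or incomplete."""


def check_quota_unchecked(policy, requested):
    # Ploughs on regardless: fails with AttributeError if policy is None,
    # or TypeError if policy.limit is None.
    return requested <= policy.limit


def check_quota_checked(policy, requested):
    # Detect the missing data up front and report it as a specific error.
    if policy is None:
        raise PolicyError("no policy supplied")
    if policy.limit is None:
        raise PolicyError("policy has no limit set")
    return requested <= policy.limit


def handle_request(policy, requested):
    try:
        return "allow" if check_quota_checked(policy, requested) else "deny"
    except PolicyError as err:
        # Deal with the one bad record instead of crashing the whole program.
        return f"skipped: {err}"


print(handle_request(QuotaPolicy(limit=10), 5))
print(handle_request(QuotaPolicy(), 5))
```

The unchecked version works fine for as long as every record is complete, which is exactly why this sort of bug can sit unnoticed until the first bad record arrives.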

Error checking in your program code is a bit like making backups: the only time you’ll ever regret it is if you forget to do it!

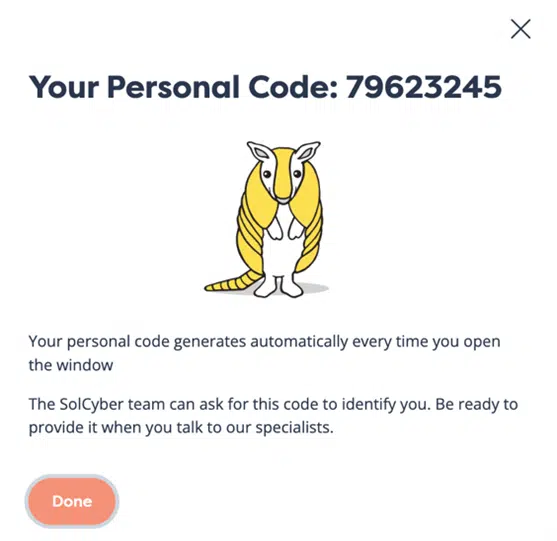


Paul Ducklin is a respected expert with more than 30 years of experience as a programmer, reverser, researcher and educator in the cybersecurity industry. Duck, as he is known, is also a globally respected writer, presenter and podcaster with an unmatched knack for explaining even the most complex technical issues in plain English. Read, learn, enjoy!
