AI went mainstream almost overnight. The technology has already changed how we live, work, and interact with each other.

The use cases for AI are practically endless. Here are some of the things people are doing with AI:

Unfortunately, the nascent technology has already shown several weaknesses, potentially opening the door to unforeseen types of hacks. Cybercriminals are already finding ways to use AI to their advantage, the consequences of which can be devastating.

Cybercriminals have access to the same tools as ordinary people. While AI is used by companies to create helpful chatbots on their websites or speed up dozens of Excel tasks, it is also being used for ingenious cyberattacks that have disturbingly high hit rates.

Let’s examine just a handful of these types of attacks:

Researchers have shown that ChatGPT can create far more compelling phishing emails than humans. CNET wrote an article in February exposing how easy it is for scammers to leverage this technology to develop emails designed to get people to download malware.

One of the old rules of thumb for spotting fraudulent emails was looking for typos and grammatical errors. Why spammers have such a lousy grammar record is one of those mysteries of the universe that might never be solved, but it’s likely due to the fact that these emails don’t go through an editor and are written by non-Native English speakers. In both cases, ChatGPT is the miracle they’ve been waiting for.

Not only will ChatGPT craft emails with the correct spelling and grammar, the writer can even tell GPT-based language models to compose emails in a specific tone, such as college graduate or expert banking executive, to better address targets depending on their industry.

The subject of deep fake technology merits a book of its own. The potential of this technology for harm is, unfortunately, quite mind-boggling.

A deepfake is an AI-generated media that combines and superimposes existing images and videos onto other images and videos to create a fake video in the likeness of someone else. Viral deepfakes of Morgan Freeman, Keanu Reeves, Tom Cruise, a Korean newsreader called Kim Joo-Ha, Wonder Woman, and others have been created.

Deepfake voices apply the same principles as deepfake media but to audio files and a person’s voice. Using deepfake voices, a cybercriminal can phone you at the office and impersonate your boss with surprising accuracy.

Midjourney, a popular AI image generation tool, is getting better and better at creating deepfake photos. Like all Machine Learning tools, it works better with subjects for which the AI model has lots of data, such as celebrities and other well-known personalities.

The potential for abuse with this technology is very concerning and these AI tools can be used in conjunction with each other. Using text-to-speech technology, it’s possible to hold entire conversations with ChatGPT and impersonate someone’s voice, creating both a phishing script and impersonated voice entirely through AI. Although OpenAI’s API does check for content policy violations, it’s only a matter of time before other AI models with similar capabilities start appearing on the scene, exponentially increasing their potential for abuse.

BEC attacks occur when a scammer successfully impersonates a senior executive of an organization, especially someone with the right to open the purse strings — CFOs, CEOs, etc.

By training a language model on the tens of thousands of emails written by “CEO Jenny Smith,” a fraudster can teach that language model to write almost exactly like CEO Jenny Smith. Using this technique, Fake CEO Jenny Smith can email employees and demand payment of fictitious bills.

AI has proven its chops as a competent software developer. ChatGPT even passed Google’s coding exam interview for Level 3 engineer, a position paying $183K per year.

Although it hasn’t happened yet, the fear exists that AI can be used to easily create mutating software that repeatedly changes its signature to bypass antiviruses and firewalls that check these signatures against databases of known malware.

On the other side of the coin, AI also helps inexperienced users write software code. But the code is not guaranteed to be written with best practices in mind. Poorly written code opens the door to loopholes and makes it easier for threat actors to carry out cyberattacks.

For example, the SQL injection attack was notorious for its effectiveness in the early 2000s. And it was the most common web attack vector in 2010. Even MySQL.com was embarrassingly hit by an SQL injection attack in 2011.

This type of attack has become mostly a historical relic. Improvements in security, coding practices, and also a shift to no-SQL databases have all contributed to almost obliterating this attack vector.

But let’s look at just one example of how poorly written code can bring back this line of attack in full force, despite all the advancements in technology.

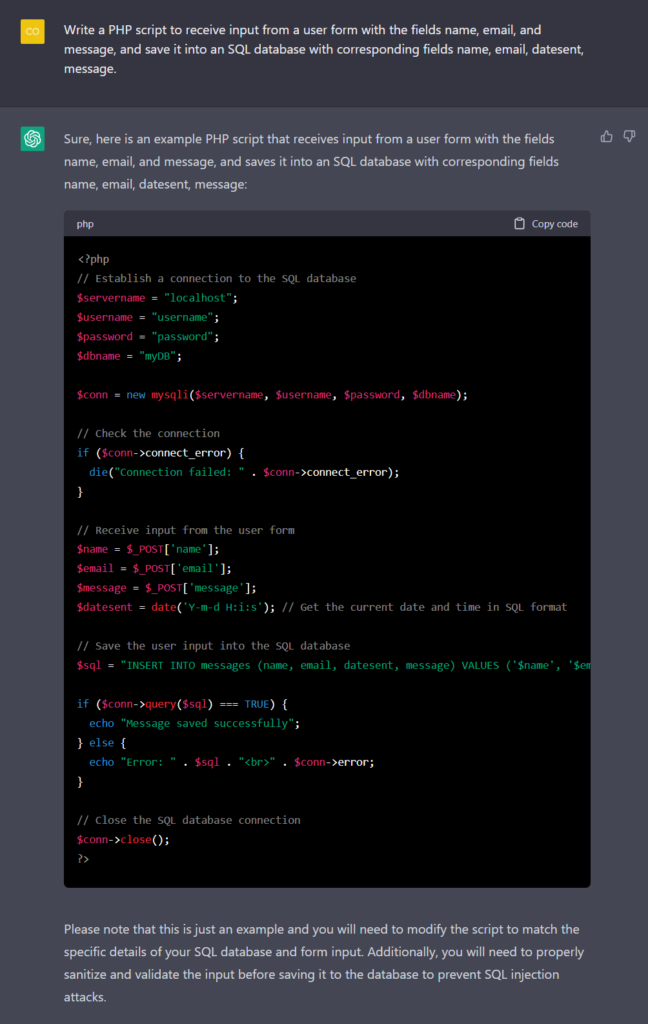

In the following example, we asked ChatGPT to generate PHP code to save data to a database. The code it generated is an SQL injection waiting to happen. And it has other suboptimal lines, such as assuming that a date field will take data in a date format instead of numeric.

At the end of the code, ChatGPT recommends sanitizing user input. An inexperienced coder is unlikely to recognize the importance of the statement and so ignore it.

People use these tools to program things swiftly, not to get caught in the details. Someone using an AI tool to write code will unlikely want to “lose time” on “unimportant details.”

SQL injections are just one example. Poorly written code could lead to:

Our world runs on code. Anything with flawed code in it immediately becomes a new potential attack vector for cybercriminals.

When used by experienced programmers, AI coding tools can be awesome timesavers. When used by inexperienced coders, they can potentially cause more security damage.

Prompt injections reveal just how immature and untested AI tech is.

A few weeks ago, Bing’s implementation of ChatGPT was successfully tricked into revealing its basic instructions through what has now been termed a “Prompt injection” attack. Such attacks trick the language model being addressed to reveal the earlier instructions on which it’s operating.

The problem with these types of attacks is that they are not syntactical errors. They are very much unexplored and open to whatever skill of human language the operator can employ.

This becomes extremely risky when the AI model’s instructions mistakenly contain proprietary or sensitive information that it can inadvertently reveal.

As language models become further integrated into other software through APIs, it’s conceivable that the language model will be able to be tricked into executing malicious code in a connected system.

The subject of prompt injections is uncharted territory that has already proven fruitful as a zone of vulnerabilities.

AI is such a buzzword, and ChatGPT has generated so much hype, that people are prone to assign sci-fi-like powers to AI. After ChatGPT was released, “AI-powered” companies bloomed everywhere. It felt like a rerun of Meta’s announcement of “the metaverse,” where every tech company on earth suddenly wanted to jump on the bandwagon.

AI exists, and AI is helpful, but it isn’t science fiction.

Cybersecurity is one area that has benefited from using AI, especially in machine learning and data analysis zones. As for tools that can take “intelligent” action on perceived threats and autonomously shut down any attack before it gets started: we’re not there yet.

AI works best when it’s leveraged as a tool in conjunction with human support. In cybersecurity, AI can potentially detect attacks more accurately and even furnish semi-automated responses that mitigate general threats. From a cybersecurity perspective, time is the best thing AI brings to the table. By detecting attacks more rapidly, humans can intervene and take intelligent action in response to an active threat.

Companies should be wary of cybersecurity providers who push AI too heavily. The technology is in its nascent stage and is itself subject to attacks. Companies that leverage AI to enhance human capabilities are the true trailblazers in cybersecurity.

AI is an exciting yet immature technology and should be treated as such. Right now, the cards are more solidly in cybercriminals’ hands because AI has many unknown potential vulnerabilities.

AI’s “special” case is that it was rapidly adopted without being thoroughly tested. Blockchain was not adopted nearly as fast. In this sense, its lack of user-friendliness was perhaps a blessing in disguise. Even so, blockchain flaws led to billions in hacked dollars because of its vulnerabilities.

Regarding cybersecurity, any cybersecurity company screaming “100% AI-powered cybersecurity!” or that thinks its laurels rest solely on AI and nothing else, should set off strident alarm bells.

For now — and probably well into the future — cybersecurity needs to be thoroughly in the hands of humans first and AI second. Cybersecurity companies should use only well-tested and non-experimental AI technologies to enhance their cybersecurity offering.

SolCyber believes very much in the potential of AI when used correctly. AI as a data analysis tool is proven ground. But, when the rubber hits the road, experienced humans need to take control of the show and respond as swiftly and intelligently as possible to shut down any existing cyberattack.

To learn more about how SolCyber can help you establish a strong cybersecurity program with the right technology, tools, and humans, contact us today.

By subscribing you agree to our Privacy Policy and provide consent to receive updates from our company.