The rapid evolution of artificial intelligence has delivered powerful new capabilities in the world of cybersecurity. The latest buzz surrounds agentic AI: systems that not only process and react to information but also plan, reason, and act autonomously to solve problems. In the context of cybersecurity, this means AI tools that can scan logs, detect anomalies, isolate suspicious files, or even patch vulnerabilities without a human ever lifting a finger.

Sounds like a dream, right? For some companies, it may even sound like a replacement for a fully staffed security operations center (SOC).

But here’s the reality: while agentic AI is undoubtedly transforming cybersecurity, it’s not a substitute for human expertise. In fact, treating it as one could expose your organization to deeper vulnerabilities. Let’s talk about why.

Agentic AI differs from earlier generations of AI, which were largely reactive. Think of it like the difference between a calculator and an executive assistant. Traditional AI would process rule-based inputs and return outputs: useful, but limited. Agentic AI, on the other hand, behaves more like a junior analyst who can interpret goals, initiate actions, and adapt plans based on changing information.

In cybersecurity, agentic AI can:

It’s active, not just reactive. Strategic, not just statistical. But is it infallible? Far from it.

Despite these advances, the human element remains critical. Here are four key reasons why agentic AI will never entirely replace the role of human security professionals.

Agentic AI can detect that a login came from an unusual IP address at an odd hour. It can even take action to block or quarantine. But what it can’t do (at least not yet) is understand the nuances behind why that might have happened.

Human analysts bring organizational, historical, and business context to the table. They can cross-reference company operations, compliance needs, and user behavior in a way that machines can’t fully replicate.
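To make the point concrete, here is a minimal Python sketch of the kind of pattern check an agent might apply to that login, and of how analyst-supplied context can change the outcome. Everything in it is a hypothetical illustration rather than any product's API: the `LoginEvent` fields, the `KNOWN_NETWORKS` and `APPROVED_EXCEPTIONS` tables, and the assumed 07:00 to 19:00 working window.

```python
from dataclasses import dataclass
from datetime import datetime
from ipaddress import ip_address, ip_network

# Hypothetical data: networks the company normally logs in from.
KNOWN_NETWORKS = [ip_network("10.0.0.0/8"), ip_network("203.0.113.0/24")]

# Hypothetical context that humans tend to hold: who is travelling or on call.
APPROVED_EXCEPTIONS = {"j.doe": "on-call engineer, travelling this week"}


@dataclass
class LoginEvent:
    user: str
    source_ip: str
    timestamp: datetime


def is_anomalous(event: LoginEvent) -> bool:
    """Pattern-only check: unfamiliar network or outside assumed working hours."""
    addr = ip_address(event.source_ip)
    unknown_network = not any(addr in net for net in KNOWN_NETWORKS)
    odd_hour = not (7 <= event.timestamp.hour < 19)
    return unknown_network or odd_hour


def triage(event: LoginEvent) -> str:
    """Combine the pattern check with business context before acting."""
    if not is_anomalous(event):
        return "allow"
    if event.user in APPROVED_EXCEPTIONS:
        # Context explains the anomaly; log it rather than lock the user out.
        return "allow_and_log"
    return "escalate_to_analyst"
```

The pattern check alone would treat the travelling on-call engineer and a stolen credential identically. The exceptions table stands in for the organizational knowledge that, in practice, an analyst would have to supply and keep current.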

AI thrives on patterns. But attackers excel at breaking them.

Threat actors constantly evolve their techniques, sometimes leveraging AI themselves. Phishing emails, deepfakes, and novel exploits don’t always look like previous attacks. Human threat hunters can see the story behind the signals; they think like adversaries, not just statisticians.

Agentic AI might miss:

Humans can probe the gray areas, question assumptions, and explore hunches. These are qualities agentic AI isn’t wired for.

Autonomy sounds powerful…until it’s misapplied. An AI system acting on incomplete data or misclassifications can make mistakes at scale, blocking legitimate users, killing business-critical services, or initiating unnecessary alerts that flood your team.

Humans offer a critical layer of judgment and oversight. A well-tuned SOC will validate, escalate, or override automated decisions based on experience, common sense, and stakeholder awareness.

In regulated industries, there’s also accountability. If AI disables a hospital system due to a false positive, someone is still on the hook. Human teams provide the governance and responsibility layer that AI lacks.
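One way to picture that validate, escalate, or override layer is as an approval gate in front of the agent's actions. The sketch below is only an illustration under stated assumptions: the `ProposedAction` fields, the `LOW_IMPACT_ACTIONS` set, the `ask_analyst` callback, and the 0.9 confidence threshold are all hypothetical, and a real SOC would tune each of them to its own risk tolerance.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical policy: actions an agent may take without human sign-off.
LOW_IMPACT_ACTIONS = {"open_ticket", "quarantine_file"}


@dataclass
class ProposedAction:
    name: str          # e.g. "disable_account"
    target: str        # e.g. a hostname or username
    confidence: float  # the agent's own score, between 0.0 and 1.0


def decide(action: ProposedAction,
           ask_analyst: Callable[[ProposedAction], bool],
           min_confidence: float = 0.9) -> str:
    """Auto-run only low-impact, high-confidence actions; ask a human otherwise."""
    if action.name in LOW_IMPACT_ACTIONS and action.confidence >= min_confidence:
        return "executed_automatically"
    # Anything disruptive or uncertain waits for an analyst's verdict.
    return "executed_after_approval" if ask_analyst(action) else "overridden"
```

With a gate like this, an agent that wants to disable a business-critical system has to wait for a named person to approve it, which is also where accountability for the decision sits.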

Cybersecurity is fundamentally about trust: between users and systems, organizations and partners, machines and their overseers.

No matter how advanced AI becomes, it can’t reassure a CEO after a breach, explain risk posture to a board, or train employees on security hygiene. These tasks require empathy, communication, and culture-building.

Even inside the SOC, trust in AI outputs is earned, not assumed. Analysts must be able to verify what AI agents do, understand how they reach conclusions, and audit decisions. In short: human-AI collaboration, not human replacement.
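As a rough illustration of what auditable agent decisions could look like, the following Python sketch keeps a tamper-evident log in which each record's hash covers the previous one. The function names and record fields are hypothetical, and hash chaining is just one possible technique chosen for the example, not a description of any particular tool.

```python
import hashlib
import json
from datetime import datetime, timezone


def append_audit_record(log: list, agent: str, action: str, rationale: str) -> dict:
    """Append a record whose hash covers the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else "0" * 64
    record = {
        "time": datetime.now(timezone.utc).isoformat(),
        "agent": agent,
        "action": action,
        "rationale": rationale,  # the agent's stated reason, kept for reviewers
        "prev_hash": prev_hash,
    }
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["hash"] = hashlib.sha256(payload).hexdigest()
    log.append(record)
    return record


def verify_chain(log: list) -> bool:
    """Recompute every hash and check each record points at its predecessor."""
    prev_hash = "0" * 64
    for record in log:
        body = {k: v for k, v in record.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        if record["prev_hash"] != prev_hash:
            return False
        if hashlib.sha256(payload).hexdigest() != record["hash"]:
            return False
        prev_hash = record["hash"]
    return True
```

Storing the rationale next to each action gives analysts something to review, and the chained hashes mean that a record edited after the fact no longer verifies.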

There’s a dangerous temptation brewing: the idea that, with the right AI vendor or automation layer, cybersecurity can be a “set and forget” element. This is a myth.

Agentic AI should be thought of as a force multiplier, not a magic wand. When used correctly, it can:

But without human strategy, oversight, and creativity, even the best AI will hit a ceiling.

The most secure organizations of the future won’t be those that replaced people with AI. They’ll be the ones that built collaborative ecosystems where human judgment and machine precision reinforce each other.

Agentic AI represents a massive leap forward in the tools available to security teams. But it is a catalyst, not a destination.

The future of cybersecurity is hybrid: smart machines guided by smart humans. SOCs will become more strategic, less reactive. Analysts will become orchestrators and investigators, not just responders. And AI will evolve to assist, not replace.

So yes, adopt agentic AI. But do so with clear eyes and a strong human backbone. Because in cybersecurity, the most dangerous vulnerability is misplaced trust—including trust in your own tools.

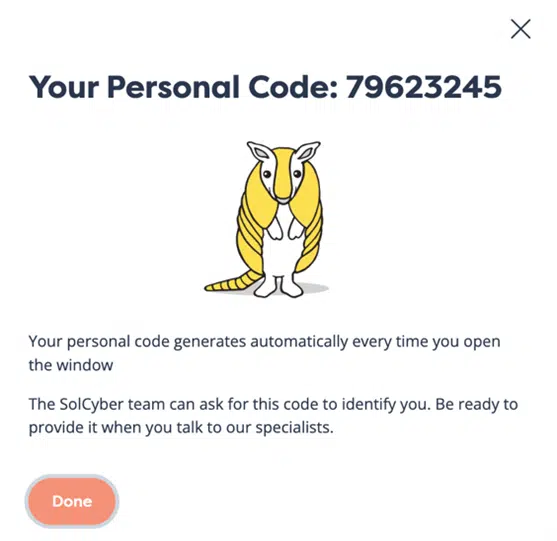

If you’d like expert guidance on how to implement a human-led, AI-augmented security strategy, consult SolCyber, a fully managed MSSP that is human-led by design and built to help organizations navigate the evolving cyber threat landscape with confidence.

Photo by Rubidium Beach on Unsplash
