ChatGPT’s release in late 2022 brought the AI industry out of obscurity, turned Nvidia into the fourth-largest company in the world, and generally reawakened the stock market.

Yet it didn’t take long for the naysayers and FUD (Fear-Uncertainty-Doubt) mongers to start spreading terrifying news about AI’s risks—everything from supposedly AI-created malware to robots developing general intelligence and taking over the world. Seriously.

However, 1.5 years later, we can take a step back, evaluate what’s happened (and what hasn’t), and speak with some degree of authority on what AI’s true risks are, as well as what’s just noise that’s not worth paying attention to.

Because our strength is cybersecurity, in this article we’re going to take a deep dive into the cybersecurity aspects of AI—comparing the noise (hype) with the reality.

A quick Google search reveals a rash of scare headlines claiming that “ChatGPT just created malware.” Most of these headlines come from the blogs of companies selling something related to malware removal. Very few come from reputable news sources, and when those outlets did run the story, it was often clickbait that never amounted to any real risk.

On the subject of AI-generated malware, we can confirm that the bark is much worse than the bite. The idea that generative AI (GenAI) will unleash an entirely new breed of malware, ransomware, or exploit kits simply isn’t true.

Speaking at the RSA Conference in San Francisco, Vicente Diaz, a security researcher at Google-owned VirusTotal, told attendees that no concrete evidence exists that recent malware was created with the assistance of GenAI. Even if it had been, he explained, none of the malware they’ve seen has been different from what humans have been creating for years.

The only way to unmistakably detect an AI-created piece of malware is if the malware is so wildly different from anything ever seen before that it clearly originated outside of human capabilities.

Nothing close to that has appeared.

Diaz also clarified that hackers are unlikely to make significantly better returns using AI because “the bar is already so low for hackers to commit cybercrimes.” For example, Verizon’s 2023 data breach report reveals that 74% of all data breaches involve the “human element”—social engineering, clicking links in phishing emails, credential theft, and so on. Hackers don’t need superintelligent malware to succeed with cybercrime. They’re doing quite well by preying on people’s ignorance.

Given AI’s current capacity, we may not see the risk of AI-generated malware turn into reality any time soon. Generative AI produces derivatives of what humans have already created, so the idea that GenAI, in its current form, can devise original work is misguided. Despite what many headlines say, GenAI isn’t intelligent; it just follows statistically probable, pre-existing patterns.

The bottom line: if hackers do need highly sophisticated malware code, they’re more likely to use existing toolkits to achieve it.

Another buzzy theme is “jailbreaking.” The term harks back to the rebellious days of iPhone jailbreaking, when users bypassed Apple’s restrictions on root access and gained far more control over their phones. Jailbroken iPhones meant users could change carriers, download unapproved apps, and customize the phone’s appearance.

“Jailbreaking” ChatGPT is nothing like this, and it shows just how influential a buzzword can be in skewing people’s perception of reality.

Jailbreaking an iPhone meant you had achieved escalated privileges. “Jailbreaking” ChatGPT only means coaxing ChatGPT into giving answers that its safety guardrails would normally block. It doesn’t give you any escalated privileges that let you wield ChatGPT for some powerfully nefarious purpose such as executing remote code.

OpenAI, the creator of ChatGPT, does offer bounties for discovered bugs, including for anyone who discovers a way to get ChatGPT to execute Python code outside its sandbox. To date, no discovered bugs have made major headlines.

Jailbreakers can get ChatGPT to create obscene and possibly illegal material, but the real-world impact of this hasn’t been widely felt. This may be because hackers are more likely to get such illegal information from the dark web than from ChatGPT, which has been rigorously trained to avoid giving out harmful data.

To minimize jailbreaking, some companies create guardrails around a ChatGPT-powered chatbot on their website. They do this by adding hidden prompts that force the chatbot to always refer to company documents before replying. A jailbreaker might be able to get that chatbot to reply with data from those company documents that shouldn’t be shared. This is easily avoided. Just don’t give your public-facing chatbot access to internal documents. However, this brings up a real risk that companies are exposed to when using AI-powered chatbots and similar tech.
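The “don’t give your public-facing chatbot access to internal documents” advice can be sketched roughly as follows. This is a minimal illustration, not any vendor’s actual mechanism: the `Document` class, its `visibility` label, and the `public_corpus` helper are all hypothetical names. The idea is simply that filtering happens before anything reaches the chatbot, and that anything not explicitly labeled public is excluded.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    title: str
    body: str
    visibility: str  # hypothetical label: "public" or "internal"


def public_corpus(documents):
    """Return only documents explicitly labeled public.

    Unlabeled or otherwise-labeled documents are excluded (deny by default).
    """
    return [doc for doc in documents if doc.visibility == "public"]


docs = [
    Document("Return policy", "Items can be returned within 30 days.", "public"),
    Document("Salary bands", "Confidential compensation data.", "internal"),
    Document("Draft press release", "Not yet approved.", ""),  # unlabeled
]

# Only this filtered list would ever be handed to the chatbot.
chatbot_docs = public_corpus(docs)
print([doc.title for doc in chatbot_docs])  # ['Return policy']
```

Because the chatbot never sees the internal documents, no prompt, however clever, can make it quote them.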

Most organizations use AI through large language models (LLMs), the underlying programs that power text-based generative AI products like ChatGPT and the automated support systems many organizations run on their sites. While jailbreaking doesn’t pose much of a real risk, these LLMs can be compromised and are actively being targeted.

OWASP, the Open Worldwide Application Security Project, recently released its Top 10 critical vulnerabilities for LLMs. Considering how widely organizations use LLMs, it’s important to know what new risks companies face by adopting this technology.

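At the time of this writing, the top 10 risks are:

1. Prompt injection
2. Insecure output handling
3. Training data poisoning
4. Model denial of service
5. Supply chain vulnerabilities
6. Sensitive information disclosure
7. Insecure plugin design
8. Excessive agency
9. Overreliance
10. Model theft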

The prevalence of LLMs in organizations has made them an attractive attack vector. Hackers know that investing time in these attacks is likely to bear fruit because of the growing number of opportunities. All the above threats can result in reputational loss, sensitive assets being released, code exposure, and even embedded threat actors.

Poisoned training data is a related risk that can lead to direct and lasting damage. This occurs when the data the LLM relies on is compromised or damaged, leading to false or inaccurate results that render the LLM useless. For example, one security researcher managed to get developers to include bogus, hallucinated code in their projects 32,000 times, demonstrating how impactful bad model output can be.
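One practical guard against bogus AI-suggested code is to refuse any dependency that hasn’t already been vetted by a human. The sketch below assumes the risky suggestions arrive as package names, which is only one form the problem can take. The `APPROVED_PACKAGES` allowlist and the `unvetted` helper are hypothetical, and the example names are invented.

```python
import re

# Hypothetical allowlist of dependencies the team has already reviewed.
APPROVED_PACKAGES = {"requests", "numpy", "flask"}


def normalize(name):
    """Lowercase the name and collapse runs of '-', '_' and '.' into '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def unvetted(suggested):
    """Return the suggested package names that are not on the allowlist."""
    approved = {normalize(pkg) for pkg in APPROVED_PACKAGES}
    return [name for name in suggested if normalize(name) not in approved]


# Names an AI assistant might propose; the last one is made up.
suggestions = ["Requests", "numpy", "totally-real-http-lib"]
print(unvetted(suggestions))  # ['totally-real-http-lib']
```

Anything the check flags gets a manual review before it is installed, rather than being trusted because an assistant suggested it.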

GenAI’s lack of actual understanding (and probably even actual intelligence) has been demonstrated repeatedly. Ask ChatGPT to finish the phrase “The cat sat on the…” with a four-letter word and it will always write “mat.” Contrary to how it’s described, GenAI doesn’t “think.” Rather, it predicts answers based on earlier tokens. “The cat sat on the mat” has appeared on the internet millions of times, which is why “mat” is the answer ChatGPT gives. It’s trained to predict the word that humans are most likely to use next in a series of words and phrases, based on how prevalent that word is in its training data.
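To make the “predicts the most likely next word” idea concrete, here is a toy sketch that counts which word most often follows a given three-word context in a tiny, made-up corpus. It is deliberately simplistic: real LLMs use neural networks over tokens rather than a lookup table, and the corpus, the `CONTEXT` size, and the `predict_next` function are all assumptions for illustration.

```python
from collections import Counter, defaultdict

# Tiny invented corpus; real models train on vastly more text.
corpus = [
    "the cat sat on the mat",
    "the cat sat on the mat",
    "the cat sat on the mat",
    "the cat sat on the sofa",
    "the dog sat on the rug",
]

CONTEXT = 3  # how many preceding words to condition on (an assumption)

# Map each 3-word context to a tally of the words that followed it.
counts = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for i in range(CONTEXT, len(words)):
        context = tuple(words[i - CONTEXT:i])
        counts[context][words[i]] += 1


def predict_next(prompt):
    """Return the most frequent word seen after the prompt's last words."""
    context = tuple(prompt.lower().split()[-CONTEXT:])
    followers = counts.get(context)
    if not followers:
        return None  # context never seen in the corpus
    return followers.most_common(1)[0][0]


print(predict_next("The cat sat on the"))  # mat
```

The program has no idea what a cat or a mat is. It answers “mat” purely because that continuation appears most often in the text it was given.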

While often inaccurate, this same prediction skill is what makes ChatGPT’s conversation appear so believable. It’s called “Chat” GPT because OpenAI trained it to predict “chatty,” human-sounding responses. When Meta, Facebook’s parent company, trained its Llama 2 models, it released “chat” and “non-chat” versions. Each responds differently, and the “chat” version is always more human-sounding.

Part of the ChatGPT illusion is to make people feel that chatting with ChatGPT is like chatting with a human. OpenAI has done a spectacular job at this, which is why attackers now use ChatGPT in impersonation and phishing attacks.

The ability to generate realistic, friendly, convincing, urgent, human-sounding text makes GenAI a formidable tool in the hackers’ arsenal for creating phishing emails. Hackers can prompt ChatGPT to create urgent-sounding emails without typographical errors—which have typically been the telltale sign of scam emails in the past.

Similarly, generated text can now be converted into audio, giving hackers the ability to create realistic deepfake content that convinces users to act on a phishing message. As tools grow more abundant, this risk becomes ever greater. Deepfakes are already being used in targeted attacks, as was the case with a LastPass attack earlier this year in which a CEO was impersonated through deepfake audio.

However, despite these new applications, it’s important to remember that staying protected in these cases comes down to the same time-tested fundamentals that have protected organizations from phishing and social engineering in the past. That means implementing sufficient organizational checks and training to help users recognize social engineering attacks when they occur. Even the most convincing email or deepfake will be stopped if the right processes are in place to verify transactions before they are approved.
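As a rough sketch of what such a verification process might look like, the rule below releases a payment only if it was confirmed through a different channel than the one the request arrived on, and only if large amounts have two distinct approvers. The `PaymentRequest` fields, the `may_release` function, and the 10,000 threshold are hypothetical choices for illustration, not a recommended policy.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class PaymentRequest:
    amount: float
    requested_via: str             # channel the request arrived on
    confirmed_via: Optional[str]   # channel used to confirm it, if any
    approvers: Tuple[str, ...]     # people who signed off


def may_release(request, dual_approval_threshold=10_000.0):
    """Apply a simple out-of-band and dual-approval rule."""
    # Confirmation must exist and must use a different channel than the request.
    out_of_band = (
        request.confirmed_via is not None
        and request.confirmed_via != request.requested_via
    )
    # Large payments need two distinct approvers; smaller ones need one.
    required = 2 if request.amount >= dual_approval_threshold else 1
    return out_of_band and len(set(request.approvers)) >= required


urgent_email = PaymentRequest(25_000.0, "email", None, ("alice",))
verified = PaymentRequest(25_000.0, "email", "phone", ("alice", "bob"))

print(may_release(urgent_email))  # False
print(may_release(verified))      # True
```

The check never asks how convincing the message was. A flawless deepfake still fails it unless someone confirms the request through an independent channel.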

Like the cloud before it, AI is creating new attack surface opportunities and organizations are best served by knowing which “risks” are more noise than reality and which are worth paying attention to. We can also learn from the cloud era in terms of managing risks, vulnerabilities, and staying protected. The core fundamentals still apply here, and it’s important to remember that AI providers aren’t so new that we’re defenseless against these new risks.

Consider your AI service providers as you do any other supply-chain vendor: vet each one accordingly, understand their security posture, and ensure they’re not moving so fast that they’re putting your data and your organization at risk.

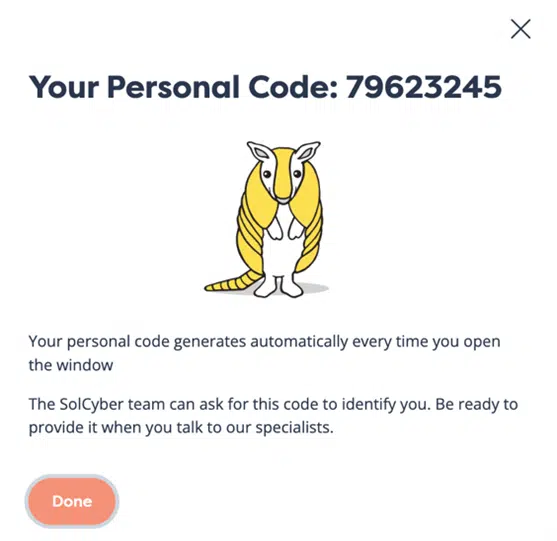

It’s up to you to do your due diligence to understand the risks of incorporating AI into your business while still ensuring you’re protected. To get a better understanding of the AI risks your organization might face and how to have proactive cyber resiliency, reach out to us at SolCyber and we’ll be glad to assist you.
