Google created its Android ecosystem to compete directly in the mobile device market against Apple’s iOS, which was released in 2007 for the explosively popular iPhone.

Until Android and iOS arrived on the market, mobile phone operating systems had typically been built on proprietary, closed-source platforms.

Nokia, BlackBerry and Microsoft, for example, had Symbian, BlackBerry OS and Windows CE.

In contrast, Apple’s iOS was directly derived from macOS (then known as OS X), which is based on the open-source Mach kernel and FreeBSD core.

Android was based on the open-source Linux kernel, a free, independently written reimplementation of the originally closed-source Unix operating system.

Disappointingly, perhaps, at least to open-source supporters, neither Google’s Android nor Apple’s iOS is an open ecosystem, despite their open-source foundations.

Google, to be fair, publishes the Android Open Source Project (AOSP), which provides a very basic software system for compatible mobile devices, including recent Google phones – a sort-of minimalistic mobile Linux distro, if you like.

And Apple publishes its XNU kernel code (XNU is short for X is Not Unix), but this can’t be turned into a working firmware distro, and would be unusable on an unmodified iPhone anyway, because the iPhone hardware is locked down to stop it running firmware that doesn’t originate from Apple itself.

Occasional security holes known as jailbreaks surface that allow enthusiasts to bypass Apple’s firmware lockdown.

But as Apple devotes ever more effort to hardware and software protection against jailbreaking, for commercial protection as much as for cybersecurity, these are showing up in public less and less frequently.

Jailbreaks generally rely on one or more very-hard-to-find zero-day security vulnerabilities that Apple patches as soon as the jailbreak is made public.

The result of this is that iPhone zero-day exploits are now worth huge amounts of money, as long as they are not disclosed for others to use.

Apple itself offers bug bounties of up to $2 million, while controversial “legal malware” vendors such as NSO Group, which sells iPhone spyware tools to intelligence agencies and other organizations, charge eye-watering fees.

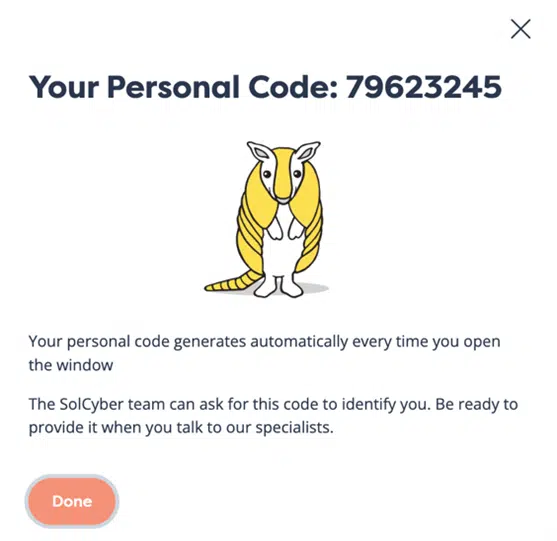

If you’re a LinkedIn user and you’re not yet following @SolCyber, do so now to keep up with the delightfully useful Amos The Armadillo’s Almanac series. SolCyber’s lovable mascot Amos provides regular, amusing, and easy-to-digest explanations of cybersecurity jargon, from MiTMs and IDSes to zero-days and jailbreaks.

Even if you know all the jargon yourself, Amos will help you explain it to colleagues, friends, and family in an unpretentious, unintimidating way.

Given how expensive it is to acquire a jailbreak, and how zealously Apple will patch it once it becomes known outside cybercrime or spyware circles, rogue programmers aiming to attack iPhone users often try to create booby-trapped apps instead.

By building apps that appear to be harmless, perhaps even modestly useful, and hiding rogue behaviors so they won’t easily be noticed, cybercriminals hope to infect iPhone users with malware.

But getting malicious apps onto an iPhone isn’t a trivial exercise, because Apple’s anti-tampering features extend to third-party apps as well as to the operating system itself.

The company’s own App Store, plus officially-sanctioned alternative app markets in the EU, are the only official sources of add-on software.

Developers need to register and sign formal contracts with Apple (even hobbyists and researchers need to pay $99 a year), and to accept Apple’s fees for distributing their software or processing their in-app purchases via the store.

Apps must be uploaded to Apple first, and won’t be approved and published in the App Store until they’ve passed various automated safety checks.

Would-be malware peddlers therefore have to sidestep Apple’s testing and validation first.

This locked-down approach to third-party apps hasn’t stopped malware-infected files from making it into Apple’s mobile ecosystem, but it has kept the flow of iPhone malware to a stream rather than a flood.

Companies with at least 100 employees may qualify for the Apple Developer Enterprise Program, which provides a controlled environment for a business to install proprietary apps of its own on designated company phones. Developers can register a very small number of test devices directly with Apple to run in “developer mode,” where private test builds can be used for development purposes. And vendors can use a program called TestFlight for up to 10,000 registered users to try out time-limited beta builds that haven’t yet made it into the App Store. Criminals can and do abuse these special-case tools, but malware victims are admittedly much harder to attract in these cases.

In contrast, Google Android users are many times more likely to suffer a spyware or malware attack, primarily because Google’s equivalent of the App Store, known as Google Play or just the Play Store, is optional.

Users can choose, admittedly not by default but easily nevertheless, to allow software from alternative online app markets, which can be web servers run by almost anyone, in almost any country of the world.

They can also sideload apps of their own, or apps given to them by someone else, simply by connecting their laptop to their Android device via a USB cable and following some fairly straightforward command-line incantations to copy the app directly onto the device.
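As a rough illustration of those command-line incantations, here is a minimal Python sketch that builds and runs the standard `adb install` command from Google’s Android SDK Platform Tools. It assumes that `adb` is installed and on your PATH, and that USB debugging has been enabled on the phone; the helper names and the file name `my-app.apk` are hypothetical, chosen for illustration only. Typing `adb install my-app.apk` at a shell prompt does the same job.

```python
import shutil
import subprocess
from typing import Optional


def adb_install_command(apk_path: str, serial: Optional[str] = None) -> list[str]:
    """Build the argument list for sideloading an APK with adb.

    `serial` selects one device when several are attached (adb's -s option).
    """
    cmd = ["adb"]
    if serial is not None:
        cmd += ["-s", serial]
    cmd += ["install", apk_path]
    return cmd


def sideload(apk_path: str, serial: Optional[str] = None) -> None:
    """Run the install; needs adb on PATH and USB debugging enabled on the phone."""
    if shutil.which("adb") is None:
        raise FileNotFoundError("adb not found on PATH")
    # check=True raises CalledProcessError if adb reports a failure.
    subprocess.run(adb_install_command(apk_path, serial), check=True)


if __name__ == "__main__":
    # Only prints the command; call sideload() to actually copy the app across.
    print(" ".join(adb_install_command("my-app.apk")))
```

No app store, review process, or developer identity check sits anywhere in that path, which is exactly why sideloading is so convenient, and so risky.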

Nevertheless, Android users who stick carefully to Google Play are generally better off than those who don’t, because Google Play software can only be uploaded by registered developers, who need to:

Those who don’t stick to the Play store are at much greater risk.

Google recently admitted, in a blog article entitled “A new layer of security for certified Android devices” (boldface emphasis below by Google), that:

Our recent analysis found over 50 times more malware from internet-sideloaded sources than on apps available through Google Play.

As a result, Google says that it will soon be adopting a sort-of halfway house between Apple’s strict lockdown and its own more libertarian approach:

To better protect users from repeat bad actors spreading malware and scams, we’re adding another layer of security to make installing apps safer for everyone: developer verification.

Unfortunately, that seems to mean that you’ll need to sign up as an officially-registered developer, sharing identity documents with Google if required, and possibly paying a $25 joining fee.

Even if you only intend to write apps that you and a few friends will use, and don’t plan to submit them to the Play Store, you’ll still have to sign up formally with Google.

If you’re an independent Android programmer, a hobbyist, or a researcher, Google has published this proposed timetable:

You can access a set of official presentation slides, entitled “Introducing the Android Developer Console: A first look”, which explains (page 4):

The new documents suggest that students and hobbyists will be able to create a “special type of account,” with fewer verification requirements and no need to pay $25.

(There isn’t yet information about any limitations that might apply to these special accounts, such as only installing onto pre-registered devices, or being locked out of some operating system features.)

Of course, this announcement also acts as bad PR for Google Play, given the finding above that unregulated apps are more than 50 times as dangerous (that’s 5000% or more) as software from the Play Store.

After all, you can turn that 5000% number on its head, by calculating 1/5000% = 2%, and rewrite this finding in the form of a reciprocal:

Even checked and verified Google Play apps turn out to be infected with malware up to 2% as often as apps on off-market download sites.
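The flip from “over 50 times” to “up to 2%” is plain reciprocal arithmetic: 5000% is a ratio of 50, and 100% divided by 50 is 2%, so any ratio above 50 gives a figure below 2%. Here is a minimal Python sketch of that calculation, using the standard `fractions` module so the results are exact rather than floating-point approximations; the function name is our own, for illustration.

```python
from fractions import Fraction


def reciprocal_as_percent(times_as_often: Fraction) -> Fraction:
    """If A happens N times as often as B, return B's rate as a percentage of A's.

    Fractions keep the arithmetic exact, so 100/50 comes out as exactly 2.
    """
    if times_as_often <= 0:
        raise ValueError("ratio must be positive")
    return Fraction(100) / times_as_often


if __name__ == "__main__":
    # Google's figure: sideloaded sources showed over 50 times the malware of Play.
    for ratio in (Fraction(50), Fraction(60), Fraction(100)):
        pct = reciprocal_as_percent(ratio)
        print(f"{ratio}x as often -> Play rate is {float(pct):.2f}% of the sideloaded rate")
```

The larger the ratio, the smaller the reciprocal, which is why “more than 50 times as often” and “up to 2% as often” are two ways of stating the same finding.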

Not all developers are happy about this change, especially if they chose Google over Apple for Android’s openness, but Google seems determined to do it anyway.

Even after this system of developer vetting comes in, however, it’s still worth taking these steps:

Learn more about our mobile security solution that goes beyond traditional MDM (mobile device management) software, and offers active on-device protection that’s more like the EDR (endpoint detection and response) tools you are used to on laptops, desktops and servers:

Paul Ducklin is a respected expert with more than 30 years of experience as a programmer, reverser, researcher and educator in the cybersecurity industry. Duck, as he is known, is also a globally respected writer, presenter and podcaster with an unmatched knack for explaining even the most complex technical issues in plain English. Read, learn, enjoy!
