In a recent article entitled “Credit card scams in the age of mobile phones,” we looked at how cybercriminals have been adapting as payment card security improves, and as our payment habits change.

Examples of technological and behavioral changes in the past few decades include:

Granted, card scammers who can get hold of your card data, including the CVV, can still abuse your account to make fraudulent online purchases, but you’d be forgiven for assuming that these technological precautions make so-called card-present fraud impossible to pull off.

(That’s where a crook goes into a series of high-end stores such as jewelry outlets, buys high-value items on your account at each one, and walks out with them right then and there, with no delivery address needed, no delivery delay, and no risk of the delivery being staked out.)

After all, even if the criminals burgled your home unnoticed while you were out and stole the card you had left behind, they wouldn’t know the PIN, and if they pickpocketed your phone they wouldn’t know the code to unlock it.

Neither the PIN nor the lock code is stored anywhere on the card or your phone, and neither your phone nor the card chip is likely to give up its secrets without being unlocked first, even under an electron microscope.

Even if the criminals resort to robbery to get your phone, by swooping past on a bicycle or motor scooter and snatching it off you while you’re actually using it, they’re still unlikely to be able to use your wallet app, because that needs the lock code (or your face or fingerprint) a second time to authorize payments.

But wait!

As we explained in that earlier article, criminals have figured out a weak link in the “wallet app” ecosystem, namely that they don’t actually need your payment card’s PIN, or access to the physical card itself.

They don’t need access to your phone, with or without its lock code, or to any of your Apple or Google accounts.

As long as they can get hold of your basic card details (name, number and expiry date), plus a small amount of personal data such as billing address, plus just one multifactor authentication (MFA) code from your bank…

…they can add YOUR card to THEIR wallet app, on THEIR phone, protected by THEIR lock code.

Simply put, they can turn a mobile device that they already own and operate into a tool for authorizing THEIR purchases against YOUR account, while your card and your phone remain safely in your own possession.

And, for the most part, they can get all that personal information using old-school phishing attacks.

They just need some sort of believable pretext to lure you into putting your card details, home address, and current MFA code into a phoney website under the pretense of processing a modest payment that you might not consider unusual or dishonest.

Typical lures that currently seem to be working for these scammers include fees for rescheduling home deliveries, toll-road charges where the automated billing system didn’t work, or so-called parking “fines” levied by one of the many privatized parking “enforcement” companies now used around the world by shopping centers, service stations, airports, and even public-sector bodies such as municipalities.

Being resistant to phishing attacks is therefore just as vital as ever, so it’s worth looking at some of the above-and-beyond tricks that can help attackers get hold of personal data even if you don’t think you gave anything away.

Indeed, the original research we wrote up last time uncovered an interesting phishing tactic used by those phone-wallet scammers.

We didn’t cover this before because it’s a phishing tactic rather than a wallet-app tactic, but it’s definitely worth digging into now, because of the high-value criminal activities that it enables when used by the wallet scammers.

As we described last time, these scammers make every effort not to miss out on MFA codes that their phishing activities acquire.

They do this not by relying entirely on automation, but by going in for so-called human-led attacks, where criminal operators work in shifts, each in front of a rack of 30 or more phones, reacting instantly when their phishing tools report that a new victim has entered the trap.

This means the criminals are on hand to react right away, and even to try out data from partially completed web forms where the victim was lured in for a while, but bailed out before pressing the final [Pay] button.

To be clear, it’s important to bail out from a phishing site right away if you suddenly figure out halfway through that you should have stayed clear.

But it’s much better not to go near it in the first place if you can possibly help it, and here’s why.

We’re going to show you how easy it is to pull off the sort of tricks that these phone scammers are using, whereby even a half-filled web form that you then abandon nevertheless gives away valuable personal data.

We’ll start with a basic web form that invites you to put in a credit card number, expiry, and CVV before clicking [Pay] to proceed:

In a real attack, a web form of this sort would generally look much more realistic, not least because free tools exist that will automatically make pixel-perfect copies of legitimate sites, including their pay pages.

Here, however, we’re not studying the art-and-design side of phishing, or hoping to pass ourselves off believably as someone else – we’re just showing you some simple tricks that, sadly, are widely used by legitimate and crooked sites alike.

We’ll be using the browser’s own built-in features to log keystrokes, mouse movements, and more, as well as to create misleading action buttons.

On this page, the browser captures the data you enter without any scripting or in-page trickery, and only interacts with the outside world if you press the [Pay] button, which we showed above, labeled with the attribute type="submit" in the HTML source:

Because the <form> tag has an attribute specifying action="processed.html", the filled-in form is automatically uploaded under that name to the same website that the HTML originally came from.
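To make that concrete, here’s a minimal Node.js sketch of what the receiving server gets. It assumes the form uses the browser’s default encoding (application/x-www-form-urlencoded) and made-up field names cardnum, expiry and cvv, because our test page’s actual field names aren’t reproduced here. It also shows how a relative action such as processed.html is resolved against the page’s own URL (shop.example is a placeholder):

```javascript
// Minimal sketch with hypothetical field names (cardnum, expiry, cvv).

// A relative action URL is resolved against the URL of the page itself,
// so the data goes back to the site the HTML came from.
function resolveAction(pageUrl, action) {
  return new URL(action, pageUrl).href;
}

// Decode an application/x-www-form-urlencoded request body into an object.
// URLSearchParams turns "+" back into spaces and undoes %-escapes.
function parseFormBody(body) {
  return Object.fromEntries(new URLSearchParams(body));
}

const target = resolveAction('https://shop.example/pay/index.html', 'processed.html');
const fields = parseFormBody('cardnum=4111+1111+1111+1111&expiry=12%2F27&cvv=123');

console.log(target); // https://shop.example/pay/processed.html
console.log(fields); // every field arrives, including the CVV
```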

Although all the data, including the secret code (CVV), is sent over the connection, payment industry rules require the site handling the payment data to meet various security requirements.

Notably, a compliant payment site will not keep the CVV after it’s been used (ideally it should only ever be held temporarily in memory and expunged when no longer needed), and will always ensure that the data is transmitted over an encrypted connection, typically by using HTTPS to upload it.

Technically, the test server we used to capture the upload in the image above is not complying with the rules, because the uploaded data, including the CVV, has clearly been recorded in a logfile from which it could later be leaked or breached.
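By way of contrast, here’s a hypothetical helper, not taken from our test server, showing how a site that wanted to stay on the right side of the rules might scrub a submission before anything reaches a logfile. It assumes made-up field names cardnum, expiry and cvv, drops the CVV altogether, and keeps only the last four digits of the card number, a common masking convention:

```javascript
// Hypothetical helper: scrub payment fields before writing a log entry.
// Field names (cardnum, cvv) are assumptions for illustration only.
function redactForLog(fields) {
  const safe = { ...fields };
  // Never keep the CVV once it has been used: drop it from the record.
  delete safe.cvv;
  if (typeof safe.cardnum === 'string') {
    // Keep only the last four digits, masking the rest with asterisks.
    const digits = safe.cardnum.replace(/\D/g, '');
    safe.cardnum = '*'.repeat(Math.max(digits.length - 4, 0)) + digits.slice(-4);
  }
  return safe;
}

const logged = redactForLog({ cardnum: '4111 1111 1111 1111', expiry: '12/27', cvv: '123' });
console.log(JSON.stringify(logged));
// {"cardnum":"************1111","expiry":"12/27"}
```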

The action attribute can specify a full URL, as we shall see shortly, which could be on a different site altogether. Annoyingly, browsers won’t show you the URL to which a form will be submitted before the data gets sent. But any attempt to divert an HTTPS page to a non-HTTPS form will produce a security warning, because that means your data would be sent unencrypted for anyone along the way to sniff out and steal.
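Even though the browser won’t show you the submission URL up front, it can be worked out from the page source. The hypothetical checker below is a sketch, not a browser feature: it resolves a form’s action against the page URL and flags the two situations described above, namely data heading for a different site, and data heading from an HTTPS page to a plain HTTP destination. The URLs are placeholders:

```javascript
// Hypothetical checker: where would this form's data actually be sent?
function checkFormTarget(pageUrl, action) {
  const page = new URL(pageUrl);
  // An empty or missing action submits back to the page's own URL.
  const target = new URL(action || pageUrl, page);
  return {
    target: target.href,
    // Different scheme, host or port counts as a different site here.
    crossSite: target.origin !== page.origin,
    // HTTPS page posting to a non-HTTPS destination.
    downgrade: page.protocol === 'https:' && target.protocol !== 'https:',
  };
}

console.log(checkFormTarget('https://shop.example/pay/', 'processed.html'));
// → crossSite: false, downgrade: false
console.log(checkFormTarget('https://shop.example/pay/', 'http://collector.example/grab'));
// → crossSite: true, downgrade: true
```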

Unfortunately, tweaking this simple web page to increase its malevolence is surprisingly easy.

Here, we have made two small changes to the pay-page form that alter its behavior in two alarming ways, without any visible difference:

The addEventListener() function you see in the green text below is ripe for abuse, as we shall now see.

These tricks work in both regular and mobile browsers, which we verified by accessing our test server from an Android phone.

(We tried an iPhone as well, and the results were as good as identical.)

Note that all keys can be logged, including [Backspace], [Tab] and the cursor keys.

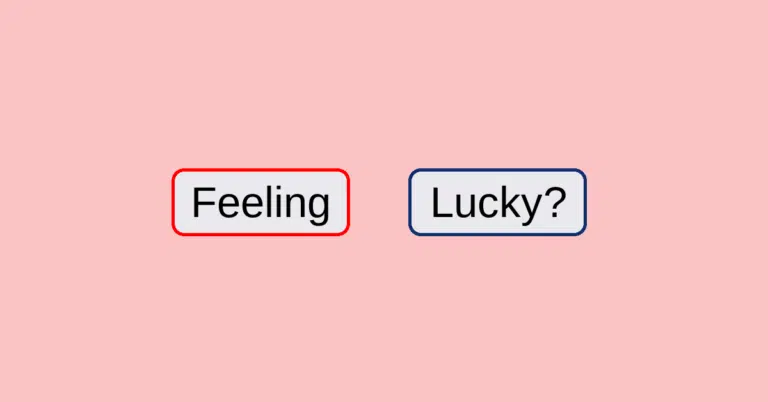
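To see why logging editing keys matters, consider what anyone holding such a log can reconstruct from it. This sketch assumes the log is simply a list of key values as reported by keydown events (strings such as "4", "Backspace" or "Tab"). The replay function is hypothetical, not code from our test page, and it ignores cursor keys, so it only handles typing at the end of each field:

```javascript
// Hypothetical replay of a keystroke log made of KeyboardEvent.key values.
// Tab is treated as moving to the next field; cursor keys are ignored,
// so this simple version assumes the user only typed at the end of a field.
function replayKeys(keys) {
  const fields = [''];
  for (const key of keys) {
    const last = fields.length - 1;
    if (key === 'Backspace') {
      fields[last] = fields[last].slice(0, -1);
    } else if (key === 'Tab') {
      fields.push('');
    } else if (key.length === 1) {
      // Single-character key values are printable characters.
      fields[last] += key;
    }
    // Other named keys (Shift, ArrowLeft, ...) are skipped here.
  }
  return fields;
}

// The user typed a wrong digit, corrected it, then tabbed to the next field.
const typed = replayKeys(['4', '1', '2', 'Backspace', '1', '1', 'Tab', '1', '2', '/', '2', '7']);
console.log(typed); // [ '4111', '12/27' ]
```

Even a corrected typo, or a field that was never submitted, is fully recoverable from a log like this.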

Also note that the data we entered was submitted in a final request at the end, even though we tapped on the dishonestly named [Cancel] button in the hope of bailing out:
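One simple way to get a dishonest [Cancel] button, though not necessarily the way our demo did it, relies on a default that many people don’t know about: in the ordinary case, a <button> inside a form with no type attribute, or an unrecognized one, acts as a submit button. The sketch below models that rule, plus a hypothetical audit over simplified { label, type } descriptions that flags “back out” labels on buttons that would really submit the form. It can’t catch buttons whose click handler sends the data via script:

```javascript
// Models the usual HTML rule for <button type="...">: the keywords are
// matched case-insensitively, and a missing or unrecognized value means
// the button behaves as a submit button.
function effectiveButtonType(typeAttr) {
  const t = (typeAttr ?? '').toLowerCase();
  return ['submit', 'reset', 'button'].includes(t) ? t : 'submit';
}

// Hypothetical audit: flag labels suggesting "back out" on buttons
// that would in fact submit the form.
function misleadingButtons(buttons) {
  return buttons.filter(
    (b) => /cancel|back|close/i.test(b.label) && effectiveButtonType(b.type) === 'submit'
  );
}

const flagged = misleadingButtons([
  { label: 'Pay', type: 'submit' },
  { label: 'Cancel' },                 // no type attribute, so it submits
  { label: 'Cancel', type: 'button' }, // does nothing unless scripted
]);
console.log(flagged); // [ { label: 'Cancel' } ]
```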

There’s plenty more we can do, including adding a trap for the mouse.

Here, we’ve added extra script code to react if you move your mouse over either of the buttons, whether you ultimately click on them or not.

Note how easy it is, from a programming point of view, to set up “mouseover” actions for individual parts of the page, no matter how the browser finally decides to display it.

We don’t need to keep track of pixels, or take font sizing into account, or worry about screen width, because the browser’s own rendering engine keeps track of those details for us:
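You can try the same programming interface outside a browser, because Node.js (version 15 and later) provides the standard EventTarget interface, the same one that gives DOM elements their addEventListener() method. In this sketch a plain EventTarget stands in for a button and the hover is dispatched by hand, whereas in a real page the browser’s rendering engine works out which element is under the pointer and fires the event itself:

```javascript
// Sketch: a plain EventTarget stands in for a page button (Node.js 15+).
// In a browser, the rendering engine decides which element the pointer is
// over and dispatches 'mouseover' to it; here we dispatch it by hand.
const cancelButton = new EventTarget();
const seen = [];

cancelButton.addEventListener('mouseover', () => seen.push('hovered Cancel'));
cancelButton.addEventListener('click', () => seen.push('clicked Cancel'));

// The user moves the pointer over the button but never clicks it.
cancelButton.dispatchEvent(new Event('mouseover'));

console.log(seen); // [ 'hovered Cancel' ]
```

The hover handler runs even though no click ever happens, which is all a page needs in order to record that you considered a button.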

We’ve also rigged up our web server to track victims when they arrive at the site, by setting an innocent browser cookie that’s unique for each new visitor.

Basic tracking cookies of this sort are widely used on modern websites, even those that don’t require you to log into a pre-existing account, for example if they offer any sort of site configuration options such as “don’t ask me about free offers again,” or “use dark mode with slightly larger text sizes.”

Note how the cookie makes it easy for our server to see which keystroke captures go with which victim’s session, even if there are several victims on the hook at the same time:
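The bookkeeping involved is trivial. Here’s a server-side sketch in Node.js, assuming a hypothetical cookie named sid and capture records shaped like { cookieHeader, data }: it hands out a random ID on a visitor’s first request, then buckets later requests by that ID:

```javascript
const { randomUUID } = require('crypto');

// Hypothetical cookie name; any unique per-visitor value would do.
const COOKIE_NAME = 'sid';

// Build a Set-Cookie header value for a first-time visitor.
function newSessionCookie() {
  return `${COOKIE_NAME}=${randomUUID()}; Path=/`;
}

// Extract our session ID from a request's Cookie header, if present.
function sessionIdFrom(cookieHeader = '') {
  for (const part of cookieHeader.split(';')) {
    const [name, ...rest] = part.trim().split('=');
    if (name === COOKIE_NAME) return rest.join('=');
  }
  return null;
}

// Group incoming capture records by the session they belong to.
function groupBySession(records) {
  const sessions = new Map();
  for (const { cookieHeader, data } of records) {
    const id = sessionIdFrom(cookieHeader) ?? 'unknown';
    if (!sessions.has(id)) sessions.set(id, []);
    sessions.get(id).push(data);
  }
  return sessions;
}

const grouped = groupBySession([
  { cookieHeader: 'sid=aaa; theme=dark', data: '4' },
  { cookieHeader: 'theme=dark; sid=bbb', data: '5' },
  { cookieHeader: 'sid=aaa', data: '1' },
]);
console.log(grouped.get('aaa')); // [ '4', '1' ]
console.log(grouped.get('bbb')); // [ '5' ]
```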

In case victims get suspicious, whether they clicked [Pay] or [Cancel], an attacker can also provide a decoy landing page at the end of the scamming process to suggest that nothing bad happened.

For example, if the criminals are after your card data and an MFA code, they face a dilemma of what to do about the fake payment they used as a lure.

They could simply forget about the transaction and hope you don’t check whether it actually went through, or they could aim for total verisimilitude by trying to put through a transaction on your card that exactly matches the one they referred to in their phish.

But both of these approaches have problems:

Instead, the attackers can simply redirect you at the end to a totally bogus page that assuages any suspicions you may have had by pretending that the transaction didn’t go through.

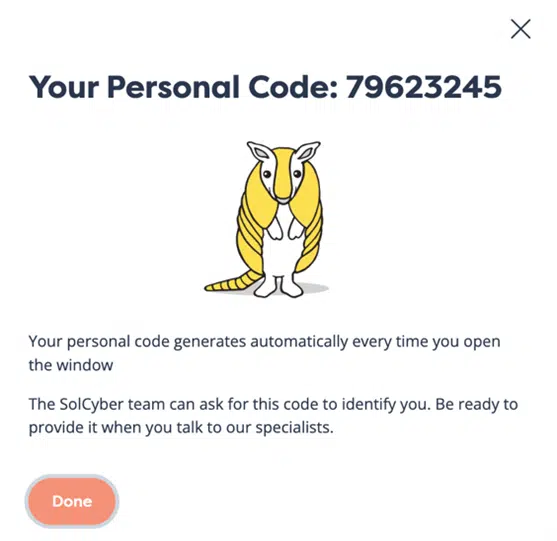

Here, we have demonstrated this trick in an ironic way, by presenting a fake security notification that makes it look as though no harm was done:

And if you decide to check your suspicions later on, the rogue website can use the victim cookie it set earlier (or match your network address, in case you clear the cookies from your browser) to make it hard for you to visit the site’s real pages again and thereby get copies for analysis:


Paul Ducklin is a respected expert with more than 30 years of experience as a programmer, reverser, researcher and educator in the cybersecurity industry. Duck, as he is known, is also a globally respected writer, presenter and podcaster with an unmatched knack for explaining even the most complex technical issues in plain English. Read, learn, enjoy!
